Project MYHouse, a smart dollhouse with gesture recognition

During the Winter Quarter of 2018 at MSTI / UW, my team developed MYHouse, a smart interactive dollhouse that performs actions when people wave a “magic wand” in the air. We built the dollhouse from scratch using laser cutting, 3D printing, a custom electrical circuit and our own software. The whole system uses data processing and machine learning to control various functional elements in the house.

(“MYHouse” stands for “Maks and Yifan House”, the first names of the people on my team)

The code for the machine learning algorithms and the whole system is open sourced at https://github.com/msurguy/MYHouse-smart-dollhouse

What it can do:

We set out to make controlling the house feel like magic. And what reminds us of magic more than a magic wand? We thought that a magic-wand-like device would let us interact with a house full of electronic devices more intuitively than a regular remote or voice controls. The smart dollhouse has the following interactive features:

- Fan (on, off, normal speed, double speed)

- Lights (M, Y letters are separately activated)

- Shutters (open or close)

- TV (on or off)

Each feature of the dollhouse is activated by performing one of the pre-defined gestures with the motion controller. It works like magic: you wave the controller in the air, and watch the house activate or deactivate the feature you thought of.
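To make the gesture-to-feature idea concrete, here is a minimal sketch of how a recognized gesture could update the state of the house. The gesture labels (`fan_toggle`, `light_m` and so on) and the `HouseState` class are made up for illustration and are not taken from the project code; the real system drives servos, LEDs and the screen rather than a plain state object.

```python
from dataclasses import dataclass


@dataclass
class HouseState:
    """Illustrative state of the four features (names are hypothetical)."""
    fan_speed: int = 0           # 0 = off, 1 = normal speed, 2 = double speed
    light_m: bool = False        # the "M" letter
    light_y: bool = False        # the "Y" letter
    shutters_open: bool = False
    tv_on: bool = False


def apply_gesture(state: HouseState, gesture: str) -> HouseState:
    """Update the state in place for one recognized gesture and return it."""
    if gesture == "fan_toggle":
        state.fan_speed = 0 if state.fan_speed else 1
    elif gesture == "fan_speed":
        # Only meaningful while the fan runs: switch between normal and double.
        if state.fan_speed:
            state.fan_speed = 2 if state.fan_speed == 1 else 1
    elif gesture == "light_m":
        state.light_m = not state.light_m
    elif gesture == "light_y":
        state.light_y = not state.light_y
    elif gesture == "shutters":
        state.shutters_open = not state.shutters_open
    elif gesture == "tv":
        state.tv_on = not state.tv_on
    # "no_gesture" and unknown labels leave the state unchanged.
    return state
```

Keeping the state separate from the hardware calls makes this kind of logic easy to test without a Raspberry Pi attached.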

Please check out the demo of the MYHouse in action below:

How did we build it?

We spent about two months on this project working through the following phases:

- Researching methods and sensors for gesture recognition

- Understanding how accelerometers work and what data they provide

- Building a low fidelity prototype with Arduino, plywood and a few simple sensors

- Learning about the PSMove motion controller and getting it to provide motion data to our computers

- Working on the machine learning algorithm, collecting data and testing our machine learning system

- Testing various methods of joining plywood together and designing a doll house in CAD

- Putting together the final circuit

- Writing software that recognizes gestures with machine learning and responds to them through actuators and lights

- Building the final product out of plywood, 3D printed objects, Raspberry Pi, sensors and actuators

We had three milestones and several rounds of feedback from the UW faculty and guests. The feedback and research discoveries led us to slightly modify the product and its functionality to provide a better user experience.

Let’s look at what technology makes a house like this work:

Electronics:

For the electronics, we decided to use off-the-shelf components that are affordable and easily sourced. Since our project involves machine learning, we wanted to put more effort into the software rather than building our own hardware from scratch.

The electronics of the smart doll house consist of (links to the components we used are provided):

- Raspberry Pi ($35 Raspberry Pi 3 or $10 Pi Zero W worked equally well)

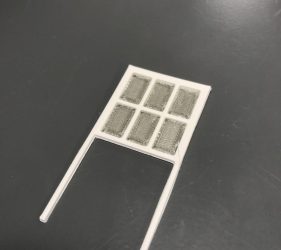

- 2 x 3.7g servo motors for the shutters

- 1 continuous rotation servo motor for the fan

- 5in HDMI screen for the TV and as a user feedback mechanism

- PlayStation Move controller (3-DoF accelerometer and 3-DoF gyro with Bluetooth connection)

- RGB LEDs (28 x WS2812B)

- Servo extension wires

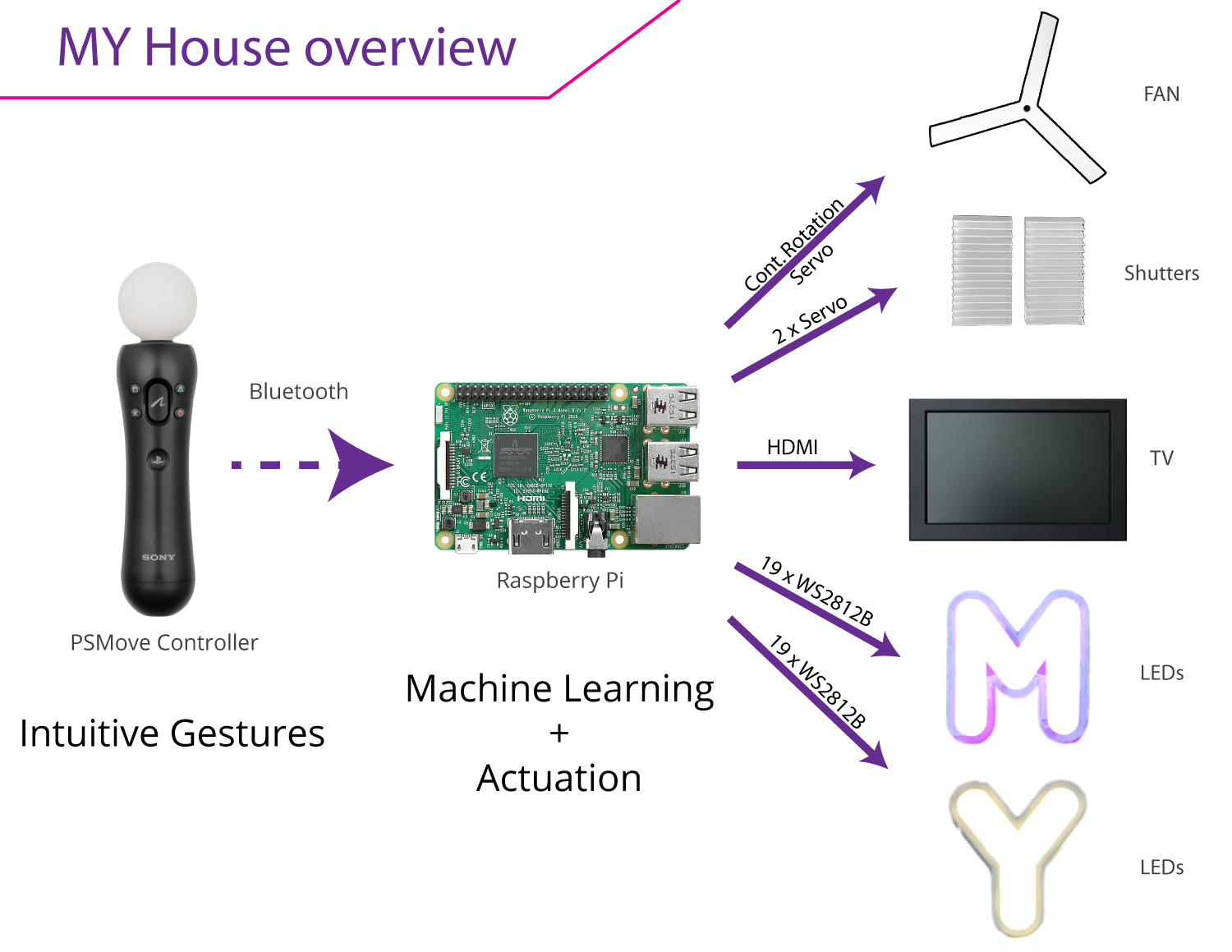

Here’s a diagram of the components and a short description of the interfaces they use to interact with each other:

With the components connected together, we made the house interactive using the Bluetooth-enabled PSMove motion controller and a machine learning program running on the Raspberry Pi. We developed a neural network that learns user-defined gestures after 30 samples and has a high success rate (over 90% in our tests), with inference response times as low as 15-30 ms on a Raspberry Pi Zero (!!!). Here’s how we did it.

Machine Learning

As part of the process, we read dozens of research papers on using sensors and machine learning in order to come up with a solution that is simple enough to build, performs well and can run on a small, inexpensive microcomputer (so-called “AI at the edge”). Reading the papers helped us understand the following:

- Which ML algorithms work well for gesture recognition

- What is the number of data samples necessary to train various algorithms

- What gestures perform well even when they’re reproduced by different people

After all of this research, we were surprised to find out that a classical neural network architecture, the multilayer perceptron (MLP), can work really well for gesture recognition if it is given enough data samples. We explored this idea further and started gathering training data for the gestures. Some of the decisions we made through this process were:

- Duration of each gesture should be no longer than 2 seconds

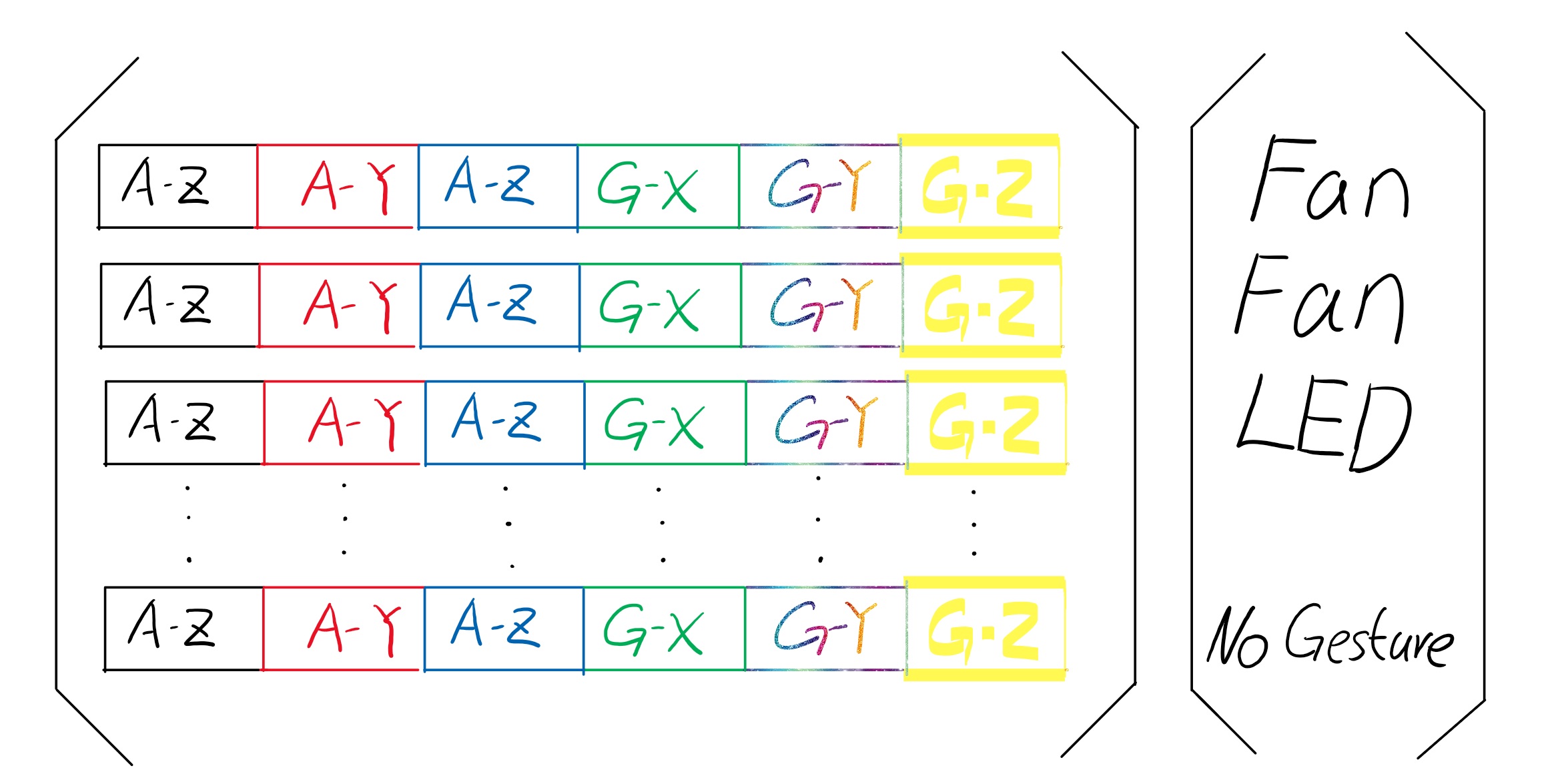

- We only need accelerometer and gyro data (x, y, z for each); no magnetometer readings are necessary

- Removal of gravity vector is not necessary

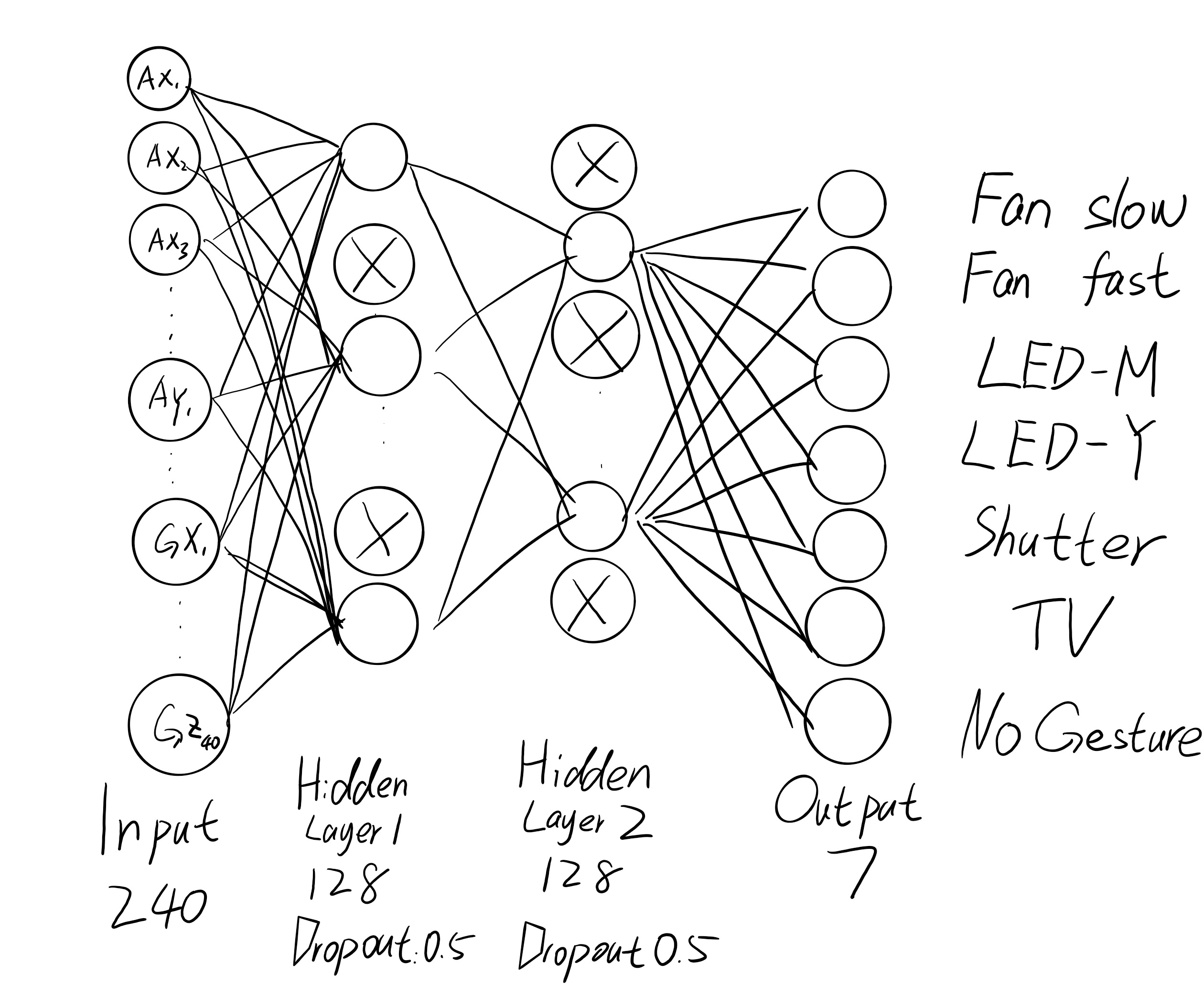

- The number of hidden layers in the neural network should be minimal (1 or 2), with 128 neurons for each hidden layer

- Regularization techniques such as dropout can improve recognition accuracy at inference time

- Total number of data points for each gesture should be 240 (6 values gathered at 20 Hz for 2 seconds)

- The number of possible output classes for the network should be equal to the number of actions we want to perform, plus a “no gesture” class
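The sizes in the list above can be checked with a small forward-pass sketch. This is not the project's code: it is plain Python with no ML library, the ReLU and softmax activations are assumptions (the post does not name them), the weights are random and untrained, and `N_ACTIONS = 6` is a placeholder for however many actions the house supports. Dropout is left out because it is only active during training, not at inference.

```python
import math
import random

SAMPLE_RATE_HZ = 20        # readings per second
WINDOW_SECONDS = 2         # maximum gesture duration
VALUES_PER_SAMPLE = 6      # accelerometer x, y, z + gyro x, y, z
N_INPUTS = SAMPLE_RATE_HZ * WINDOW_SECONDS * VALUES_PER_SAMPLE  # 240

N_HIDDEN = 128             # neurons in the single hidden layer
N_ACTIONS = 6              # assumption: placeholder number of actions
N_CLASSES = N_ACTIONS + 1  # one extra class for "no gesture"


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for a fully connected layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer):
    """Compute W @ x + b for one layer."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(x):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(x)
    exps = [math.exp(v - peak) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x, hidden, output):
    """Forward pass: 240 inputs -> 128 hidden units -> class probabilities."""
    if len(x) != N_INPUTS:
        raise ValueError(f"expected {N_INPUTS} values, got {len(x)}")
    return softmax(dense(relu(dense(x, hidden)), output))


rng = random.Random(0)
hidden_layer = init_layer(N_INPUTS, N_HIDDEN, rng)
output_layer = init_layer(N_HIDDEN, N_CLASSES, rng)
```

Calling `predict(window, hidden_layer, output_layer)` on a 240-value window returns one probability per class; the index of the largest one is the recognized gesture (or “no gesture”).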

With that, we built the neural network with the following structure:

The data matrix of our neural network looks like this:

To train our network, we ran many experiments to see how much data was optimal and what kind of data the network could generalize from. After hundreds of tests we determined that the optimal number of gesture samples was in the range of 20-25, and that the network works really well for the users who trained it (over 95% accuracy), which is a nice benefit for our product. Because this network is very simple and doesn’t need a whole lot of data, it can even be trained on the Raspberry Pi itself. The data never leaves the device, which makes the whole system more secure and removes the need for an internet connection.

Accelerometer and gyro

The main component of our smart dollhouse is the motion sensor, which measures acceleration (accelerometer) and angular velocity (gyroscope) at a high rate. During the course of the project we had to understand how accelerometers and gyros work, which sensors are available on the market, what parameters they have and what they are used for.

We tried out the following sensors and got them to work with Arduino or Raspberry Pi:

- MPU 6050 (was easy to get to work via i2cdevlib library)

- BerryIMU (has good support for Raspberry Pi)

- PlayStation Move controller (thanks to PSMoveAPI this can work with Raspberry Pi pretty well)

Initially, we wanted to build our own motion controller (magic wand) from scratch, but due to time constraints we had to pick a controller that already had wireless communication, battery management, a few buttons, an accelerometer and a gyro built in. After searching for one with open source code support, we decided that the PlayStation Move controller would be a great fit for our system. It is reasonably priced, has all the sensors that we care about, has a built-in Li-Po battery and charger, and has lots of buttons. The PSMoveAPI project was the cornerstone of getting the controller to work with the Raspberry Pi quickly and saved us a lot of time.

To understand the data coming out of the motion controller, I wrote some software to visualize it (I made it open source here). I used Python and WebSockets to take the data streamed from the motion controller in real time and turn it into a graph that we can analyze and understand. Here’s a video of the data visualization at work:

After capturing the data from the accelerometer in various spreadsheets, we were ready to train our network and try out many parameters affecting the training (gestures themselves, network parameters).
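As a rough illustration of how recordings in a spreadsheet could become training inputs, the sketch below reads CSV rows and flattens each recording into one 240-value vector (40 samples of 6 values). The column names (`label`, `recording`, `ax` ... `gz`) and the file layout are assumptions made for this example; our actual spreadsheets may be organized differently.

```python
import csv
import io

SAMPLES_PER_GESTURE = 40  # 20 Hz for 2 seconds
SENSOR_COLUMNS = ("ax", "ay", "az", "gx", "gy", "gz")  # assumed column names


def load_gestures(csv_file):
    """Return a list of (label, flat_vector) pairs from an open CSV file.

    Rows are grouped by (label, recording). Recordings that do not have
    exactly 40 samples are skipped, so every vector has 240 values.
    """
    rows_by_recording = {}
    for row in csv.DictReader(csv_file):
        key = (row["label"], row["recording"])
        sample = [float(row[name]) for name in SENSOR_COLUMNS]
        rows_by_recording.setdefault(key, []).append(sample)

    gestures = []
    for (label, _recording), samples in rows_by_recording.items():
        if len(samples) != SAMPLES_PER_GESTURE:
            continue  # incomplete recording
        flat = [value for sample in samples for value in sample]
        gestures.append((label, flat))
    return gestures


# Usage with an in-memory CSV holding one made-up "circle" recording.
header = "label,recording,ax,ay,az,gx,gy,gz"
body = [f"circle,1,{i},0,0,0,0,1" for i in range(SAMPLES_PER_GESTURE)]
example = load_gestures(io.StringIO("\n".join([header] + body)))
```

Here `example` holds a single `("circle", vector)` pair whose vector has 240 values, ready to be fed to a classifier.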

Prototyping:

To get to the final working product we had to do many rounds of prototyping and iteration. We started out with a few cardboard prototypes of the house to understand what scale we should be designing for. After 3D printing a couple pieces of furniture of different sizes and placing them in boxes of different sizes, we got a better idea of the size that we should work towards.

We then built a section of the house that demonstrated some of the functional items (lights, fan, shutters) working with an Arduino to get an idea of how things fit together and what “wow” factor they bring when people interact with them. Next, we consulted my wife, who is an architect, about the floor plan and the size of the final prototype.

With the design in mind, we drew the plans in 3D modeling software, laser cut cardboard and glued all pieces together. After confirming the sizes and making sure pieces fit nicely, we laser cut the pieces out of plywood and made use of snap fit to join the pieces.

Finally, we painted each piece of plywood and cardboard with one of the three colors (white, metallic, black), to better separate the visual structure of the house and to make it look more finished. Enjoy the pictures from our fabrication process below:

After the house was put together with the painted pieces of plywood and cardboard, we ran the wiring, glued the functional items in their locations and connected the Raspberry Pi to all of the electronic components. Finally, we uploaded the machine learning software and tested everything together.

Here’s a PowerPoint presentation about the whole project. It has a lot of pictures and videos of the build:

Let me know if you have any questions about this project!

UPDATE: I published the code for the whole system on my Github at https://github.com/msurguy/MYHouse-smart-dollhouse

References:

Salman Cheema, Michael Hoffman, Joseph J. LaViola, “3D Gesture classification with linear acceleration and angular velocity sensing devices for video games”. February 2013

SeleCon: Scalable IoT Device Selection and Control Using Hand Gestures
