Research essay: The History of Processing

Introduction to generative arts and Processing

In this research essay, I will talk about the history and future of a technology called “Processing”, which is often used in the context of computer art and, more specifically, generative art. I will provide some background on generative art, on tools like Processing and their capabilities, and on the implications of the technology. How did Processing come about? Who led its development? Who cares about it? What forces shaped it into what it is now? How did it affect the world? What’s next for Processing and similar technologies? You will find answers to these and many other questions about Processing as you read through this paper. Let’s start with some definitions.

What is “generative art”? According to Wikipedia, “Generative art refers to art that in whole or in part has been created with the use of an autonomous system” [1]. In a generative artwork, a non-human system produces the artistic output based on algorithms or ideas supplied by the artist who controls the system. The history of the term “generative art” goes back to 1965, when two pioneers of computer art, Frieder Nake and Georg Nees, independently exhibited some of the earliest examples of generative art[2]. Since then, generative artworks have grown increasingly complex, and the new art form has gained widespread recognition. Architects, musicians [3], designers, computer scientists, video producers, roboticists, and others have used computational and generative art to create new designs, artworks, songs, and audio-visual and other experiences.

Over the years, many methods of creating generative or computational art were tried. Most of them involved writing complex code in a way that was not easily approachable by newcomers to programming. Things started to shift around the year 2000, after John Maeda published “Design By Numbers”[4], a book and simplified programming language for the creation of visual art. One of the technologies that followed this publication, and that enables people to work in the medium of generative art to this day, is a tool known as “Processing”.

Processing is an open source, multi-platform development environment for visual programming. Inspired by simple programming languages like BASIC and Logo, it provides an entry point to programming by means of manipulating graphics and sounds. Processing is used in schools and universities, and as a tool of the trade by professional artists and programmers. As of March 2018, Processing had about 250,000 active users [5].

History of Processing

Processing was created at the Aesthetics + Computation group within the MIT Media Lab by Benjamin Fry and Casey Reas in the early 2000s as a successor to John Maeda’s visual programming language and tool called DBN, “Design By Numbers” (Fry B., 2004). The new tool, Processing, has been in active development since then and continues to be used and expanded by its community to this day. What are the roots of Processing? What decisions were made in order to arrive at what is now known as Processing? Who was involved? Let’s take a look at the archives to discover the answers to these and more questions.

Since the invention of computers, telling a computer what to do has required a large amount of specialized knowledge. Computer programmers of the 1960s-2000s needed years of extensive training in order to write programs. For people who wanted to program but were not mathematically inclined and lacked that training, the barrier was too great to overcome. This problem was studied by a few universities and organizations, and as a result, by the end of the 1990s, multiple attempts had been made at lowering the barrier to teaching programming to people who are visually (but not mathematically) inclined. One of the first attempts in this domain was made by Seymour Papert, Cynthia Solomon and Wally Feurzeig through the introduction of the “Logo” programming language in the 1960s.

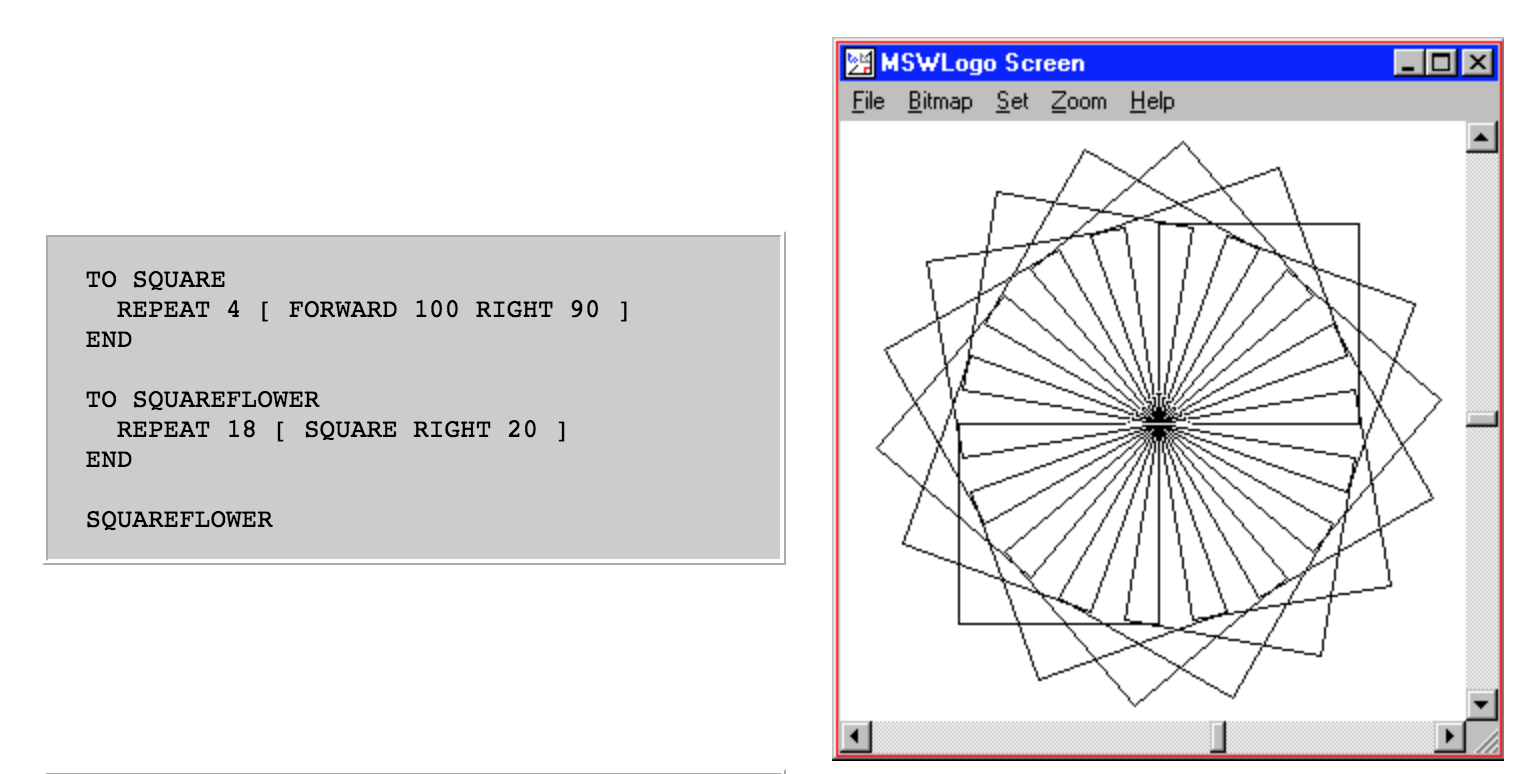

One of the most used and notable features of Logo was the introduction of so-called turtle graphics. Using this method of interacting with the computer, a programmer can instruct a real or a virtual object (“turtle”) by programming its behavior, specifically by programming these three distinct attributes: location, orientation, and the state of a pen (up/down and what color to draw with). Programming the turtle in turtle graphics is like writing out instructions for a person looking for a hidden treasure: take 20 steps forward, turn left 25 degrees, take 15 steps backward, put the pen (or shovel) down and keep walking for 20 more steps, leaving a trace on the ground, etc. With these simple commands, a wide variety of drawings can be achieved. For example, here’s a program that draws a few squares rotated around the center, with only 7 lines of code:

Screenshot of a simple Logo program and its output
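The three turtle attributes described above (location, orientation, and pen state) can be sketched in a few lines of Python. This is only an illustration and not the Logo program from the screenshot: the names `Turtle` and `rotated_squares`, the square count, and the side length are all hypothetical choices made for this example. Instead of drawing on a screen, the turtle records the line segments it would draw.

```python
import math


class Turtle:
    """A minimal turtle: a location, an orientation, and a pen state."""

    def __init__(self):
        self.x, self.y = 0.0, 0.0   # location
        self.heading = 0.0          # orientation in degrees, counter-clockwise
        self.pen_down = True        # pen state
        self.segments = []          # lines "drawn" so far

    def forward(self, steps):
        # Move along the current heading, leaving a trace if the pen is down.
        rad = math.radians(self.heading)
        nx = self.x + steps * math.cos(rad)
        ny = self.y + steps * math.sin(rad)
        if self.pen_down:
            self.segments.append(((self.x, self.y), (nx, ny)))
        self.x, self.y = nx, ny

    def left(self, degrees):
        self.heading = (self.heading + degrees) % 360

    def right(self, degrees):
        self.left(-degrees)


def rotated_squares(count=6, side=50):
    """Draw `count` squares, turning a little between each one."""
    t = Turtle()
    for _ in range(count):
        for _ in range(4):          # one square: four sides, four right turns
            t.forward(side)
            t.right(90)
        t.right(360 / count)        # rotate before starting the next square
    return t


drawing = rotated_squares()
print(len(drawing.segments))  # 24 segments: 6 squares with 4 sides each
```

Because every square ends where it started and the rotations add up to a full turn, the turtle finishes at its starting point, facing its original direction.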

The turtle graphics feature of Logo was so popular that many other software packages adopted the concept and made extensive use of it[6]. Compact pen plotters combined with turtle graphics opened the door not only to beginner programmers but also to artists, who could now create artworks with code: a plotter could draw complex drawings with a real pen, driven by the same kind of program that controls the behavior and pen state of the virtual “turtle”.

As the methods of creating graphics on the computer evolved, they did so in parallel with ways of programming, but the two rarely intersected. Tools like Photoshop were built by large corporations and did not provide ways for regular users to quickly program new features, while programming environments like Logo were too limited in their capabilities. In other words, if you were to learn graphics programming in the 1990s, you would need to know quite a bit of computer science in order to draw and animate a few hundred circles on the screen, or you would need to pay thousands of dollars for software that could do this for you in a limited fashion. In order to close this gap and to create a more user-friendly way of visual programming, new ways of programming were explored at research institutions like MIT. One of the potential solutions was presented in 1999 by John Maeda, a professor at the MIT Media Lab. The project, called “Design By Numbers” (DBN), and the first version of the software with the same name were aimed at designers and artists who wanted to learn programming but did not have the extensive training to do so.

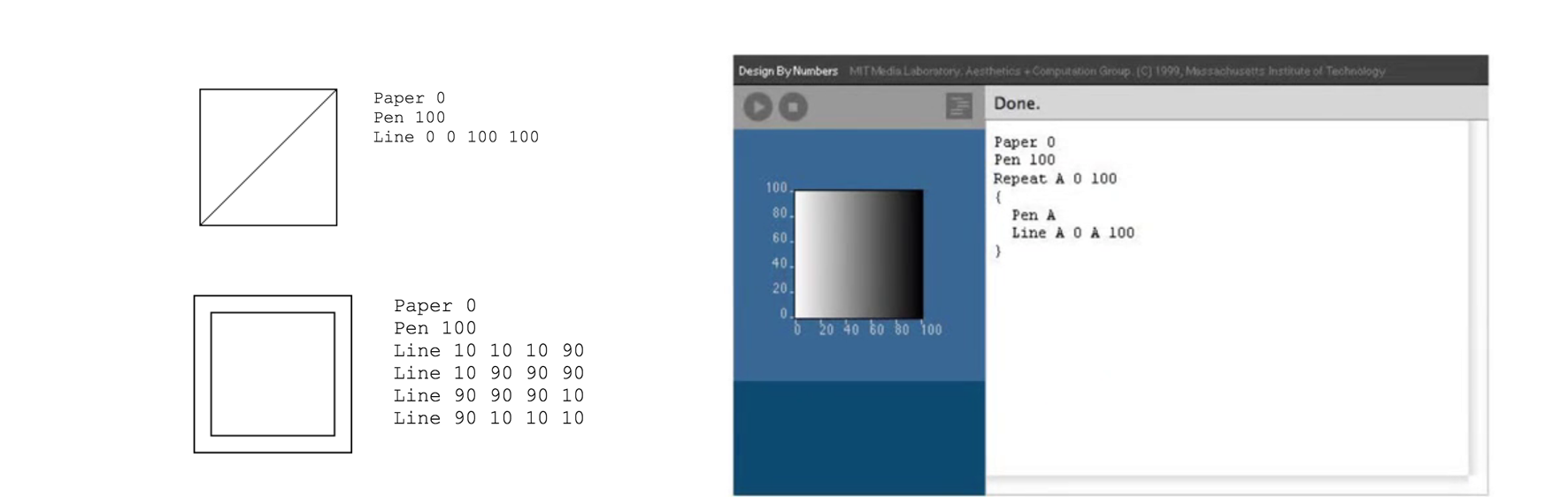

“Design By Numbers” was a book and accompanying software package that attempted to bring a new approach to programming by providing a simplified code editor and a new programming language focused on creating visual representations of programs. This new language was designed to be approachable by people not familiar with programming: artists, designers, and students without a computer science background. A user programming in the DBN language would find a simplified syntax akin to that of BASIC or Logo, where each line of code consists of an action followed by a modifier or parameter. Some example commands of the DBN language are:

- Paper 0 (sets the color of the virtual “paper” to white)

- Pen 100 (sets the color of the pen to black)

- Line 10 10 10 90 (draws a line from coordinates 10, 10 to 10, 90)

- Repeat A 10 100 (repeats the enclosed block once for each value from 10 to 100 inclusive, 91 times in total, storing the current value in a variable named “A”)

With these simple commands, the user can quickly create drawings of variable complexity, from simple lines on the screen making a square, to gradients and beyond[7]:

Some examples of using DBN’s syntax to create lines, squares and gradients.[8]
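To make the behavior of these commands more concrete, here is a rough Python emulation of Paper, Pen, Line, and Repeat that builds a simple gradient. It is a sketch under stated assumptions, not DBN’s actual implementation: the `Canvas` class and the `repeat` helper are hypothetical names, the canvas is assumed to span coordinates 0 to 100 on both axes, gray values are assumed to run from 0 (white) to 100 (black), and Repeat is assumed to include both endpoints. The orientation of DBN’s vertical axis is not modeled.

```python
SIZE = 101  # assumption: coordinates run from 0 to 100 on both axes


class Canvas:
    """Hypothetical stand-in for the DBN drawing surface."""

    def __init__(self):
        self.pen = 100                                   # 100 = black
        self.pixels = [[0] * SIZE for _ in range(SIZE)]  # 0 = white

    def paper(self, gray):
        # Paper: fill the whole canvas with one gray value.
        self.pixels = [[gray] * SIZE for _ in range(SIZE)]

    def set_pen(self, gray):
        # Pen: choose the gray value used by later Line commands.
        self.pen = gray

    def line(self, x0, y0, x1, y1):
        # Line: color every pixel between the two endpoints (inclusive).
        steps = max(abs(x1 - x0), abs(y1 - y0))
        for i in range(steps + 1):
            t = i / steps if steps else 0
            x = round(x0 + (x1 - x0) * t)
            y = round(y0 + (y1 - y0) * t)
            self.pixels[y][x] = self.pen


def repeat(start, stop):
    """Inclusive counter, modeled on how Repeat is assumed to behave."""
    step = 1 if stop >= start else -1
    return range(start, stop + step, step)


canvas = Canvas()
canvas.paper(0)
iterations = 0
for a in repeat(10, 100):       # Repeat A 10 100
    canvas.set_pen(a)           #   Pen A
    canvas.line(a, 10, a, 90)   #   Line A 10 A 90
    iterations += 1

print(iterations)  # 91, because both 10 and 100 are included
```

Each pass draws one vertical line slightly darker than the previous one, which is how a handful of commands can produce a gradient.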

From the start, DBN followed these principles, which Maeda had developed over a few years of research:

- The language syntax should be easy to understand, removing frustrations often associated with writing programs

- The process of programming should be similar to sketching on a napkin, where each iteration is easily disposable, and there’s little effort in starting from scratch

- The software of this kind should work on multiple platforms and be free to use

- Trying out each iteration of the program should only take an instant

In the foreword to the “Design By Numbers” book, Paola Antonelli, who at the time was an associate curator at The Museum of Modern Art, said the following about the book and the process John Maeda describes in it: “With this book, Maeda teaches both professional and amateur designers a design process that paradoxically has a hands-on, almost arts and crafts feeling. His approach to computer graphic design is not different from an approach to wood carving.” (Maeda, Design By Numbers, 1999)

While DBN started to gain initial traction, not everyone saw value in this new combination of design and programming language. For example, Roy R. Behrens from the Department of Art at the University of Northern Iowa wrote the following about the book: “Unfortunately, the layout of the book is so unexceptional (particularly the dust jacket, which might have been used in a powerful way) that it is unlikely to convert any graphic designers, who create far more complex forms intuitively, with little or no knowledge of programming. As a result, it may only reach those who need it least, meaning those who are already straddling the line between art and mathematics, between graphic design and computer programming.” (Behrens, 2000)

DBN as a concept was powerful, but its execution was too basic to be adopted by its intended audience. Some of John Maeda’s students saw the potential in this new technology and volunteered to build its next iteration, implementing changes based on the feedback received from the initial users. Benjamin Fry and Casey Reas emerged as the ones advancing the “Design By Numbers” concept and making iterative improvements to the software platform over the next few years. John Maeda writes on the DBN website: “DBN is the product of many people. Benjamin Fry created DBN 3 and 2. DBN 1.0.1 was created by Tom White. The original version DBN 1.0 was created by John Maeda. The courseware system was developed by Casey Reas, and translated to Japanese by Kazuo Ohno. Other people that have contributed to DBN development are Peter Cho, Elise Co, Lauren Dubick, Golan Levin, Jocelyn Lin, and Josh Nimoy.” (Maeda, Home Page, 2001).

Sometime between 1999 and 2000, Benjamin Fry started working on a more powerful version of DBN that departed from the overly simplistic DBN tool. Maeda writes: “The then voluntary caretakers of the DBN system, Ben Fry and Casey Reas felt that they had the right designs for a new solution. It is called “Processing.” And it is still going strong today in a way that continues to pleasantly astound me.” (Maeda, Design By Numbers, 2005). Benjamin Fry spent the next year patching DBN and working on a new tool from scratch. The new tool, named “Processing”, was released some time in 2001. In his thesis titled “Computational Information Design”, Benjamin Fry explains the name given to this new platform:

“At their core, computers are processing machines. They modify, move, and combine symbols at a low level to construct higher level representations. Our software allows people to control these actions and representations through writing their own programs. The project also focuses on the “process” of creation rather than end results. The design of the software supports and encourages sketching and the website presents fragments of projects and exposes the concepts behind finished software.” (Fry B., 2004)

Processing kept the most successful features of DBN: easy-to-learn syntax, easy distribution (download and play), and a simple interface (a blank sketchpad with very few buttons and menu items). Under the hood, this simplicity concealed a wide variety of complex decisions. To make the tool easily distributable over the web and to make it work even without downloading, it had to be built with a technology that was supported by web browsers and could also work in standalone mode without a browser. At the time, only a handful of technologies could deliver the kind of interactivity sought from Processing. Some of them were:

- Macromedia Flash (runs in the browsers, somewhat cross-platform, great graphics/sound support)

- Macromedia Director (runs as a standalone application, great graphics/sound support)

- JavaScript (runs in the browsers only, cross-platform, very limited graphics and sound support)

- Java and Java applets (runs in the browsers and standalone, cross-platform, limited graphics support)

After considering these and other options, Benjamin Fry settled on using Java as the central technology that Processing would be built upon. In his thesis, he writes about the pros and cons of this choice in more detail (Fry B., 2004). This necessary choice would later impact a lot of politics behind Processing and have widespread implications on its success, community and its history.

After the release of Processing, Casey Reas started to build a community around it. A website with documentation of the language syntax, a forum, and a showcase of the tool was established over a period of a few months in 2001 – 2002. The website was well organized and clearly designed, welcoming newcomers and providing clear directions on how to get started. Originally, the website lived at proce55ing.net because processing.net and processing.org were not available[9]. Users also referred to Processing as “p5” for short.

The forum that was established soon after the initial release of Processing played a major role in its development and success. Casey Reas and Benjamin Fry spent a lot of time answering questions about Processing, taking feedback from users and engaging with the community. On the forum, users from all walks of life reported software bugs, requested new features, discussed and shared their code, and sent complaints and thanks. To get to know the early users of Processing, a forum topic named “Introductions” was started[10]. It revealed what kind of people were interested in computational design. Talking about the new users and the forums, Benjamin Fry writes in his thesis:

“In the past year, the number of people to sign up and download the software has jumped from just over 1,000 (in June, 2003) to more than 11,000 (as of April, 2004). Thousands have signed up and use an online discussion area, where new users can get help from more advanced. Users are encouraged to post their latest work and share the code for their sketches as part of a worldwide studio” (Fry B., 2004)

Over the first three years after the initial release of Processing, a few dozen of its power users and influencers started to emerge, attracting more new users curious about the paradigm of computational art and computational design. Some of the notable power users were:

- Jared Tarbell[11] (who later went on to cofound Etsy.com)

- Robert Hodgin (who later cofounded a framework similar to Processing)

- Toxi (who later built toxiclibs)

- Mario Klingemann (artist, author of Nodewerk, visual programming environment)

- Aaron Koblin (who later cofounded WITHIN, a virtual and augmented reality company)

The works created with Processing by these and other power users in the early years of Processing were so impressive that they were exhibited in museums throughout Europe, Japan and the Americas. Below you can see an image recreated on my computer from a Processing “sketch” of one of my favorite artists using Processing, Jared Tarbell:

One of the recurring tensions within the Processing community was the interaction between users of Macromedia Flash and users of Processing. The reason for that is that these two distinct platforms could be used to create similar output and when looking at the results, newcomers would have certain expectations from Processing as if it was Flash. Often Processing and Flash were compared by professionals and newcomers alike and various resources were created comparing the two. [12][13] Over the years the communities intersected in a lot of areas, mainly by means of sharing code that could be made to work on either platform with some effort. Many artists and programmers working with Flash became familiar with Processing and vice versa.

Beyond the first years

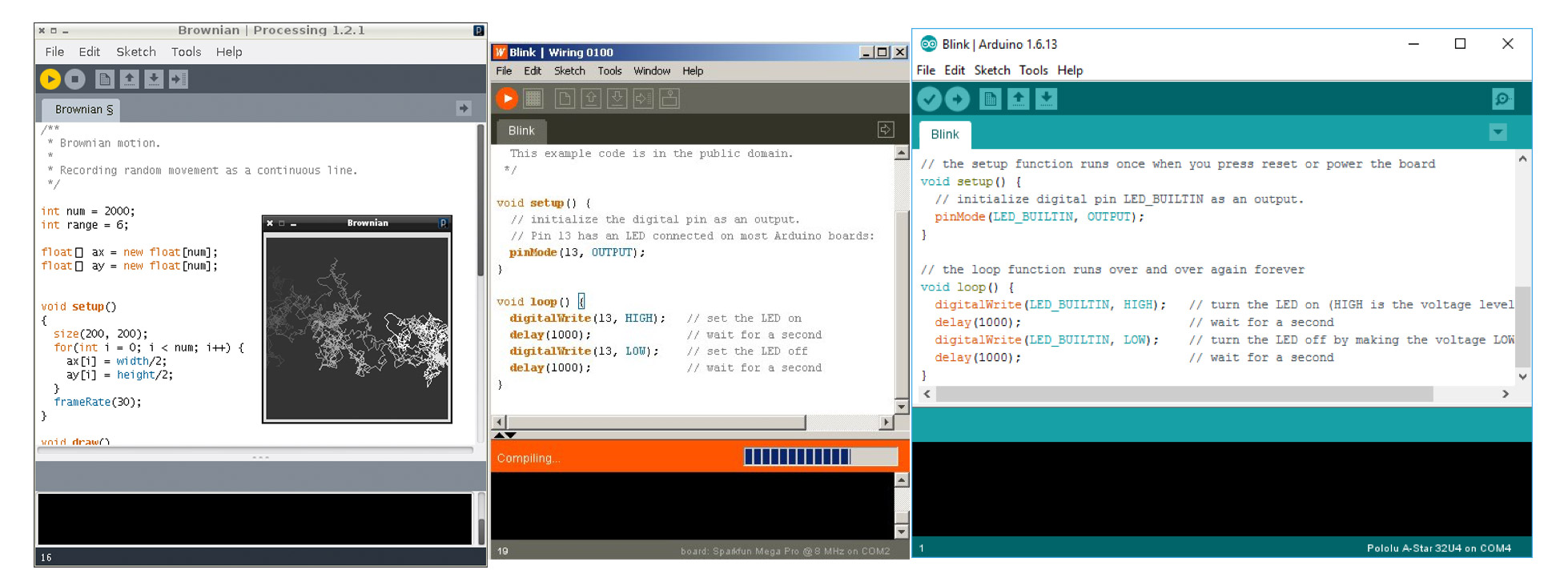

The worldwide impact of Processing started to snowball quickly after its release, changing the landscape of computational arts, visual design and tangible computing over the years that followed. The success of the Processing community was replicated a few times by others, with help and guidance from people within the Processing community. For example, physical computing development environments like Wiring and Arduino owe much to the foundations laid in Processing by Ben Fry, Casey Reas and contributors.

When Casey Reas was teaching at the Interaction Design Institute in Ivrea[14] (a town in Italy) in 2003, he met Hernando Barragán, who became interested in developing a Processing-like environment for microcontrollers as part of his thesis project[15]. Because the creators of Processing believed in open source code, they were willing to share their experience and the code of the Processing Integrated Development Environment (IDE) in order to build a new IDE for microcontrollers, called “Wiring IDE”. This new IDE shared almost all of the user interface elements of Processing and worked in a similar way, abstracting away the complexity of programming and compiling software for microcontrollers (Barragan, Wiring: Prototyping Physical Interaction Design, 2004). Wiring IDE and the microcontrollers that work with it are still in use.

The Wiring website and the community behind Wiring followed steps similar to those taken with Processing. The website offered the same types of information as Processing’s site: an exhibition, documentation, examples and language syntax were all easily accessible. One of the systems behind Processing’s success in educational settings, the courseware package, was also replicated and used for teaching Wiring to new users.

Building on the success of Wiring and Processing, one of the supervisors of Barragan’s thesis project, Massimo Banzi, wanted to lower the cost of easily reprogrammable microcontroller hardware. Together with two other people, he cloned Wiring IDE and published it under a new name, “Arduino”. Barragan recalls the details in his article titled “Untold History of Arduino”, published in 2016:

“In 2005, Massimo Banzi, along with David Mellis (an IDII student at the time) and David Cuartielles, added support for the cheaper ATmega8 microcontroller to Wiring. Then they forked (or copied) the Wiring source code and started running it as a separate project, called Arduino.” (Barragan, The Untold History of Arduino, 2016)

The Arduino platform has been running in parallel with Wiring, without official cooperation between the Arduino team and Hernando Barragan, for more than 12 years. Many of the language commands are exactly the same in Wiring and Arduino, and they originate from Barragan’s thesis research:

- pinMode()

- digitalRead()

- digitalWrite()

- analogRead()

- analogWrite()

- delay()

- millis()

The Integrated Development Environments of Processing, Wiring and Arduino also share a lot of similarities even to this day, having a simple interface consisting of tabs, console and minimal menus:

Other kids on the block

As time went on and users got accustomed to Processing, its development was not moving fast enough for some of them. This pushed those users to create their own derivatives of Processing, and a few new platforms were born.

OpenFrameworks, a platform built by Zach Lieberman in 2005, was one such example. It provided more powerful features for experienced programmers, yet still followed principles and an openness similar to Processing’s.

Cinder, another tool for creative coding, was created by Andrew Bell in 2010 to achieve higher graphical performance than Processing.

Recent developments

Ben Fry and Casey Reas are still very involved in the development of Processing to this day. Along with a newer project lead, Daniel Shiffman, they are working on Processing as a software platform and on expanding the community of users. More than a dozen other people work on Processing, and hundreds of people around the world are actively involved in expanding the Processing community.

Processing has been ported to many other programming languages and mediums. It has been made to work in Python, JavaScript, Android, ActionScript and on other platforms and languages, with p5.js being one of the most actively developed right now. The forum still drives a lot of the conversation within the Processing community.

In 2012, the Processing Foundation was established to develop and promote the distribution of Processing software. The foundation has served as a vehicle for developing dozens of important features within Processing and for improving the resources available to its users, specifically through participation in Google Summer of Code, which began in 2011.[16]

Since 2001, over 20 books have been written about Processing by various authors.

Update: Since this essay was published, a few people from within the Processing community have provided valuable feedback. Please follow the thread here:

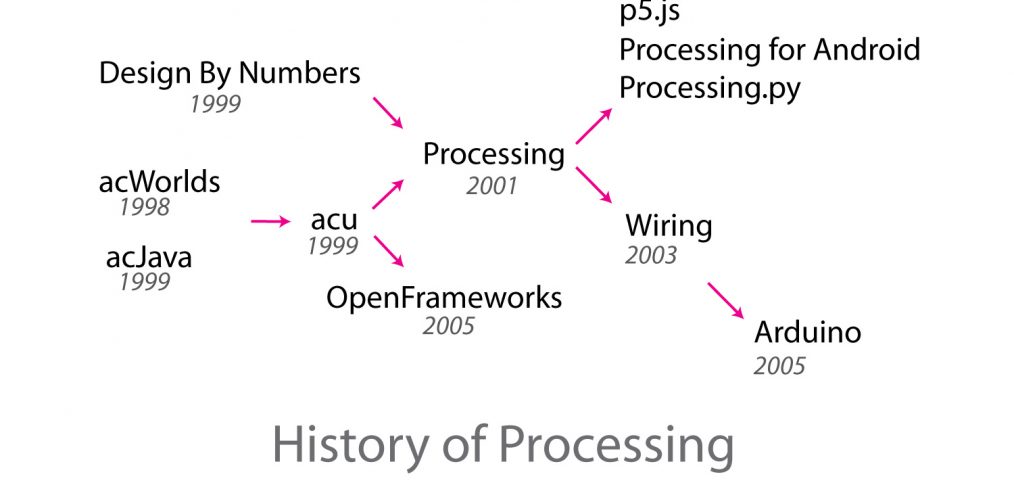

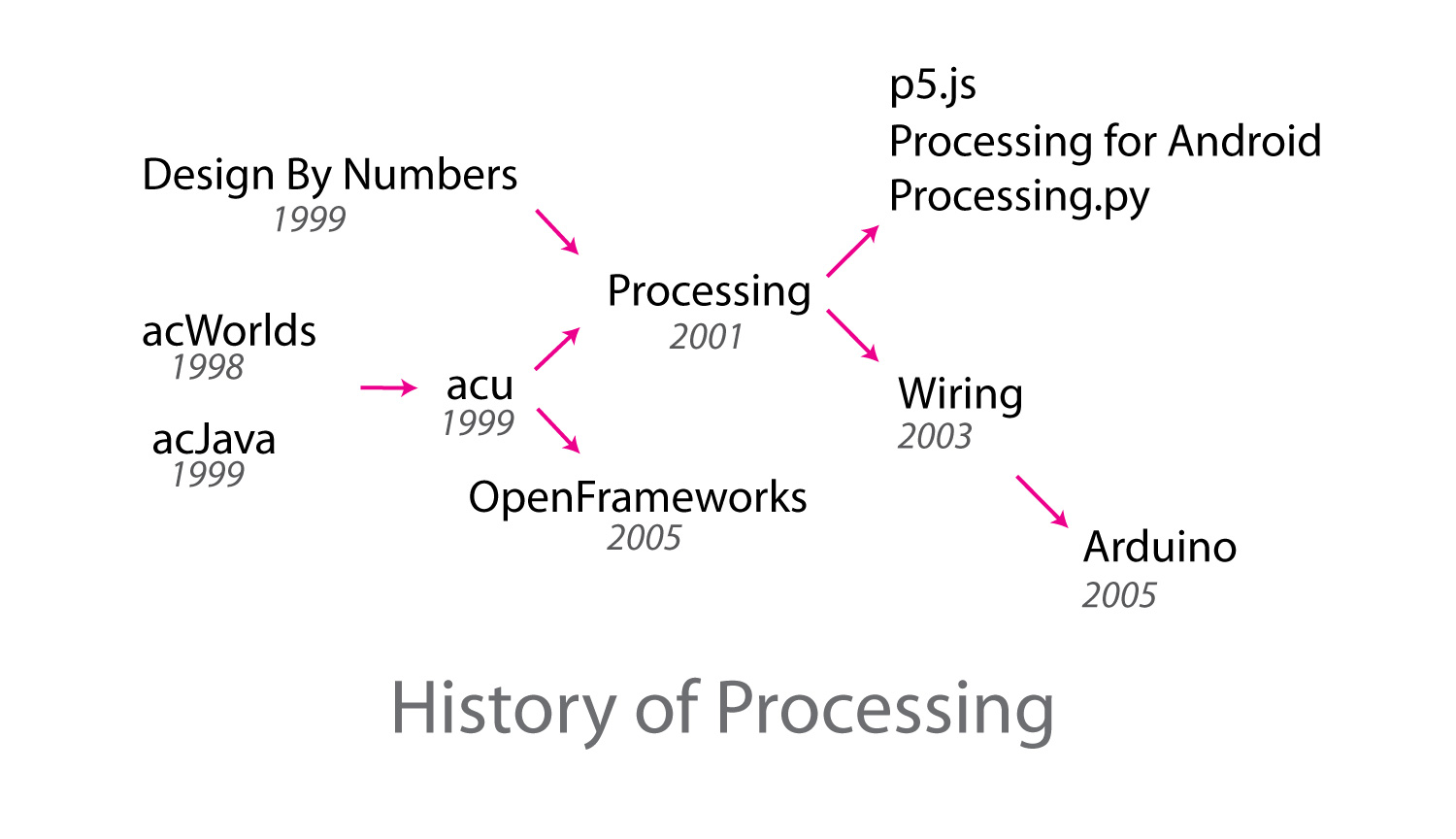

I believe this is a more accurate history. acu and DBN were concurrent. Note acWorlds/acJava acu influence mainly as a counterexample. I also added @openframeworks as a more direct descendent of acu. acu code history can be found here: https://t.co/i9kYviTnaL cc: @jaredschiffman pic.twitter.com/yySu8piXLf

— tom white (@dribnet) March 21, 2018

Origin Story: in early ’99 @johnmaeda was fed up with his grad students endlessly tweaking the code infrastructure (acJava, acWorlds, etc). So he emailed us an “acwhatever” ultimatum telling us to hash out a final version by the end of Jan. The result was the original acu library pic.twitter.com/TpeaeEuae1

— tom white (@dribnet) March 21, 2018

Appendix:

History of Processing in one diagram (includes corrections from Tom White’s tweet referenced above).

Inspiration:

Boden, Margaret & Edmonds, Ernest. (2009). What is generative art?. Digital Creativity. 20. 21-46. 10.1080/14626260902867915.

DeVaul, Richard W. Emergent Design and Image Processing: A Case Study. 1999

http://dada.compart-bremen.de/

Bibliography

Barragan, H. (2004). Wiring: Prototyping Physical Interaction Design. In H. Barragan. Ivrea. Retrieved from http://people.interactionivrea.org/h.barragan/thesis/thesis_low_res.pdf

Barragan, H. (2016). The Untold History of Arduino. Retrieved from Hernando Barragan: https://arduinohistory.github.io/

Behrens, R. (2000). Design By Numbers review. Leonardo, 33(2), 148.

Fry, B. (2004). Tool. Retrieved from Computational Information Design: http://benfry.com/phd/dissertation/6.html

Fry, B. (2004, April). Computational Information Design. Retrieved from Benfry.com: http://benfry.com/phd/dissertation/

Maeda, J. (1999). Design By Numbers. MIT Press.

Maeda, J. (2001). Home Page. Retrieved from Design By Numbers website: http://dbn.media.mit.edu/

Maeda, J. (2005). Design By Numbers. Retrieved from Maeda Studio: http://maedastudio.com/1999/dbn/index.php

References:

[1] https://en.wikipedia.org/wiki/Generative_art

[2] http://dada.compart-bremen.de/item/artwork/890

[3] https://en.wikipedia.org/wiki/Brian_Eno

[4] http://dbn.media.mit.edu/whatisdbn.html

[5] https://github.com/processing/processing/wiki/Project-List#processing

[6] https://en.wikipedia.org/wiki/PILOT

[7] https://vimeo.com/72611093

[8] http://dbn.media.mit.edu/introduction.html

[9] https://processing.org/discourse/alpha/board_Collaboration_action_display_num_1074297082.html

[10] https://processing.org/discourse/alpha/board_Collaboration_action_display_num_1063814266.html

[11] http://www.complexification.net/

[12] https://processing.org/discourse/alpha/board_Syntax_action_display_num_1043423000.html

[13] https://processing.org/discourse/alpha/board_CourseBlueprints_action_display_num_1063988900.html

[14] https://processing.org/discourse/alpha/board_Collaboration_action_display_num_1063814266.html

[15] http://people.interactionivrea.org/h.barragan/thesis/thesis_low_res.pdf

[16] https://processingfoundation.org/advocacy/google-summer-of-code